In the rapidly evolving landscape of artificial intelligence, neurons in machine learning stand as the fundamental building blocks of our most advanced AI systems. These artificial neurons, inspired by the intricate networks within the human brain, form the backbone of neural networks that power everything from voice assistants to autonomous vehicles. As we delve into the world of neurons in machine learning, we’ll uncover how these simple yet powerful units process information, learn patterns, and ultimately enable machines to perform tasks that once seemed exclusive to human intelligence.

Artificial neural networks, deep learning, and cognitive computing have revolutionized the field of AI, pushing the boundaries of what’s possible in technology. By understanding these digital neurons, we gain insight into both the potential and limitations of modern AI, offering a glimpse into a future where machines may rival or even surpass human capabilities in certain tasks.

Understanding Neurons in Machine Learning

The Biological Inspiration

To understand artificial neurons, let’s first look at their biological counterparts. In the human brain, neurons are specialized cells that transmit information through electrical and chemical signals. They connect to form complex networks, allowing us to process information, learn, and make decisions.

Similarly, in machine learning, artificial neurons are computational units designed to mimic the basic functions of biological neurons. They receive inputs, process them, and produce outputs, forming the foundation of artificial neural networks.

From Perceptrons to Modern Neurons

The journey of artificial neurons began with the perceptron, introduced by Frank Rosenblatt in 1958. This simple model laid the groundwork for the more complex neural network architectures we use today.

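    import numpy as np

    class Perceptron:
        def __init__(self, input_size, learning_rate=0.1):
            self.weights = np.zeros(input_size)  # one weight per input feature
            self.bias = 0.0
            self.learning_rate = learning_rate

        def activate(self, x):
            # Step activation: output 1 when the weighted sum plus bias is positive
            return 1 if np.dot(self.weights, x) + self.bias > 0 else 0

        def train(self, X, y, epochs=100):
            for _ in range(epochs):
                for xi, target in zip(X, y):
                    prediction = self.activate(xi)
                    # Perceptron rule: the update is zero when the prediction is correct
                    update = self.learning_rate * (target - prediction)
                    # np.asarray lets plain Python lists work as inputs too
                    self.weights += update * np.asarray(xi)
                    self.bias += update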

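This example captures the core of a perceptron: a weighted sum of the inputs, a step activation, and a weight update driven by the prediction error. Modern artificial neurons keep the weighted sum but typically replace the step with a smoother activation function.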
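To see the update rule at work, the sketch below trains a perceptron on the logical AND function, a linearly separable problem on which the rule is guaranteed to converge. The helper names `train_perceptron` and `predict` and the AND dataset are illustrative assumptions; the logic mirrors the class above in a compact, self-contained form.

```python
import numpy as np

def train_perceptron(X, y, learning_rate=0.1, epochs=100):
    """Hypothetical helper: learn weights and bias with the perceptron rule."""
    weights = np.zeros(X.shape[1])
    bias = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            prediction = 1 if np.dot(weights, xi) + bias > 0 else 0
            update = learning_rate * (target - prediction)  # zero when correct
            weights += update * xi
            bias += update
    return weights, bias

def predict(weights, bias, X):
    """Apply the step activation to every row of X."""
    return [1 if np.dot(weights, xi) + bias > 0 else 0 for xi in X]

# Truth table for logical AND (illustrative data)
X_and = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_and = np.array([0, 0, 0, 1])

weights, bias = train_perceptron(X_and, y_and)
predictions = predict(weights, bias, X_and)
print(predictions)
```

A single perceptron can only separate classes with a straight line (or a hyperplane in higher dimensions), so the same loop never settles on data such as XOR, where no such line exists.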

How Neurons Work in Artificial Neural Networks

The Anatomy of an Artificial Neuron

An artificial neuron consists of several key components:

- Inputs: Data received from other neurons or external sources

- Weights: Values that determine the strength of connections between neurons

- Bias: An additional parameter that allows the neuron to shift its activation function

- Activation function: A mathematical operation that determines the neuron’s output

The Process of Information Flow

- Input Reception: The neuron receives multiple inputs, each associated with a weight.

- Weighted Sum: It calculates the sum of all inputs multiplied by their respective weights, plus the bias.

- Activation: The weighted sum is passed through an activation function, which determines the neuron’s output.

- Output: The result is then passed to the next layer of neurons or as the final output of the network.

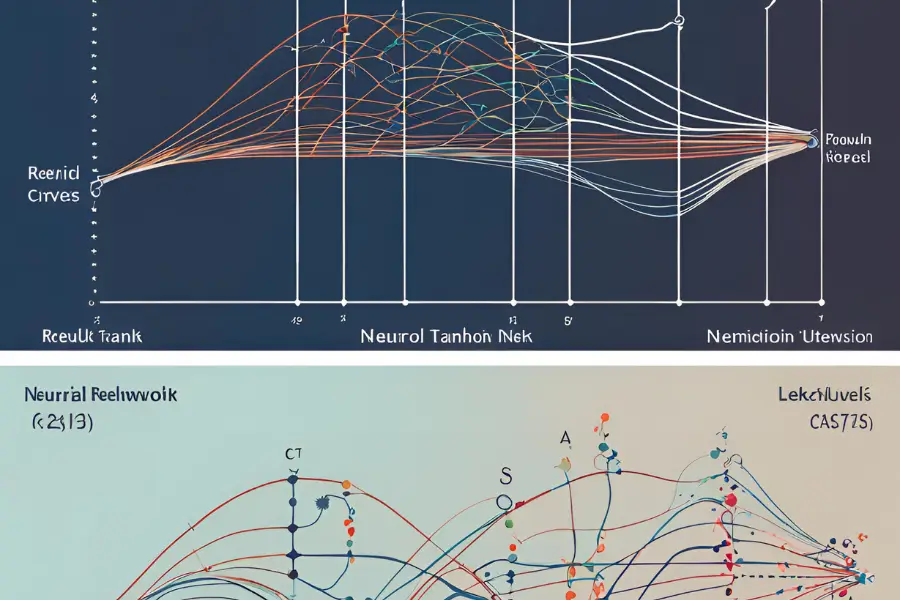
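The flow described above can be sketched for a single neuron in a few lines. The function name `neuron_output`, the sigmoid activation, and the input values are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def neuron_output(inputs, weights, bias):
    """Hypothetical helper: one neuron's forward pass."""
    # Input reception and weighted sum: each input times its weight, plus the bias
    weighted_sum = np.dot(weights, inputs) + bias
    # Activation: squash the weighted sum into the neuron's output
    return sigmoid(weighted_sum)

inputs = np.array([1.0, 2.0])       # illustrative values
weights = np.array([0.5, -0.25])
bias = 0.0

# Output: the value that would be handed to the next layer
output = neuron_output(inputs, weights, bias)
print(output)  # weighted sum is 0.5*1.0 - 0.25*2.0 + 0.0 = 0.0, and sigmoid(0.0) = 0.5
```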

Activation Functions

Activation functions introduce non-linearity into the network, allowing it to learn complex patterns. Common activation functions include:

- Sigmoid: Outputs values between 0 and 1

- ReLU (Rectified Linear Unit): Outputs the input if positive, otherwise 0

- Tanh: Outputs values between -1 and 1

    import numpy as np

    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    def relu(x):
        return np.maximum(0, x)

    def tanh(x):
        return np.tanh(x)

These functions shape how information flows through the network: the choice of activation sets the range of each neuron’s output and affects how easily the network can be trained.
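One way to see why non-linearity matters: without an activation function, two stacked layers collapse into a single linear layer, so depth adds nothing. The small sketch below checks this with hand-picked weights (an assumption for illustration, not taken from any trained model) and shows that inserting ReLU breaks the equivalence.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

# Hand-picked weights for illustration only
W1 = np.array([[1.0, -1.0],
               [-1.0, 1.0]])
W2 = np.array([[1.0, 1.0]])
x = np.array([1.0, 0.0])

# Two linear layers applied in sequence...
stacked_linear = W2 @ (W1 @ x)
# ...equal one linear layer whose weight matrix is the product W2 @ W1
collapsed = (W2 @ W1) @ x

# Placing ReLU between the layers gives a result no single linear layer reproduces here
with_relu = W2 @ relu(W1 @ x)

print(stacked_linear, collapsed, with_relu)
```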

Types of Neural Network Architectures

Feedforward Neural Networks

Feedforward networks are the simplest form of artificial neural network: information moves in one direction, from the input layer through any hidden layers to the output, with no loops.
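As a minimal sketch of that one-directional flow, the example below passes inputs through a hidden layer and an output layer in order. The `feedforward` helper and the weights are assumptions: the weights are set by hand, not learned, so that the tiny network computes XOR.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def identity(z):
    return z  # linear output layer

def feedforward(x, layers):
    """Pass x through each (weights, bias, activation) layer in order."""
    for weights, bias, activation in layers:
        x = activation(weights @ x + bias)
    return x

# Hand-set weights (an assumption for illustration) that compute XOR
layers = [
    (np.array([[1.0, 1.0], [1.0, 1.0]]), np.array([0.0, -1.0]), relu),  # hidden layer, 2 neurons
    (np.array([[1.0, -2.0]]), np.array([0.0]), identity),               # output layer, 1 neuron
]

inputs = [[0, 0], [0, 1], [1, 0], [1, 1]]
outputs = [float(feedforward(np.array(p, dtype=float), layers)[0]) for p in inputs]
print(outputs)
```

With one hidden layer, the network handles XOR, a pattern that a single neuron with a step activation cannot represent.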

Convolutional Neural Networks (CNNs)

CNNs are specialized for processing grid-like data such as images, and they have shown remarkable performance in image recognition tasks. For instance, a study published in Nature Medicine reported that a CNN achieved 95% accuracy in detecting skin cancer, outperforming many dermatologists.
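The operation that gives CNNs their name can be sketched directly in NumPy. This is a deliberately slow, loop-based illustration under simple assumptions (single channel, no padding, stride 1); the function name `conv2d_valid`, the toy image, and the kernel are all hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide kernel over image with no padding and stride 1.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)  # same weights reused at every position
    return out

image = np.arange(16, dtype=float).reshape(4, 4)  # toy 4x4 "image"
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])                  # illustrative diagonal-difference filter

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)
```

Because the same small kernel is reused at every position, a convolutional layer needs far fewer weights than a fully connected layer over the same image.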

Recurrent Neural Networks (RNNs)

RNNs are designed to work with sequential data, which makes them well suited to tasks like natural language processing and time series prediction. A study by JP Morgan reportedly found that RNNs achieved an accuracy of 76% in predicting stock market trends, significantly outperforming traditional statistical models.
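What makes a network recurrent is a hidden state that is carried from one time step to the next. The sketch below shows a basic (Elman-style) cell with random, untrained weights; the names `rnn_step` and `run_rnn`, the layer sizes, and the input data are assumptions for illustration.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One step of a basic recurrent cell: mix the new input with the previous state."""
    return np.tanh(W_x @ x_t + W_h @ h_prev + b)

def run_rnn(sequence, W_x, W_h, b):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = np.zeros(W_h.shape[0])
    for x_t in sequence:
        h = rnn_step(x_t, h, W_x, W_h, b)
    return h

rng = np.random.default_rng(0)               # random, untrained weights for illustration
input_size, hidden_size = 3, 4
W_x = rng.normal(size=(hidden_size, input_size))
W_h = rng.normal(size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

sequence = rng.normal(size=(5, input_size))  # 5 time steps of made-up input
h_forward = run_rnn(sequence, W_x, W_h, b)
h_reversed = run_rnn(sequence[::-1], W_x, W_h, b)
print(h_forward.shape)
```

Feeding the same inputs in reverse order produces a different final state, which is the property that lets RNNs model order in text or time series.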

Real-World Applications of Neural Networks

Image Recognition and Computer Vision

The MNIST dataset, a collection of handwritten digits, is often used to demonstrate the power of neural networks in image recognition. Using relatively small neural networks, particularly convolutional ones, researchers have achieved over 99% accuracy in recognizing these handwritten digits, showcasing the potential of AI in tasks that require visual pattern recognition.

Natural Language Processing

Neural networks have revolutionized language processing tasks. For example, Google’s BERT (Bidirectional Encoder Representations from Transformers) model has significantly improved the accuracy of language understanding in search queries, leading to more relevant search results for users.

Predictive Analytics

In the financial sector, neural networks are used for fraud detection, credit scoring, and market prediction. For instance, PayPal uses neural networks to detect fraudulent transactions, reducing fraud rates to just 0.32% of total payment volume.

Ethical Considerations and Future Developments

Bias in AI Systems

As neural networks become more prevalent in decision-making systems, addressing bias becomes crucial. The AI Now Institute has reported instances of facial recognition systems showing lower accuracy for women and people of color, highlighting the need for diverse training data and regular audits of AI systems.
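An audit of this kind often starts by breaking accuracy down by group instead of reporting one overall number. The sketch below shows the mechanics only: the function name `accuracy_by_group`, the group labels, and all of the data are made up for illustration and do not come from any real system.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so gaps between groups become visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

# Made-up labels and predictions, purely to show the mechanics
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = accuracy_by_group(y_true, y_pred, groups)
print(report)
```

In this toy data the overall accuracy is 75%, which hides the fact that every error falls on group B.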

Privacy Concerns

The ability of neural networks to process and analyze vast amounts of data raises important privacy questions. It’s essential to develop AI systems that respect individual privacy while still providing valuable insights.

Neuromorphic Computing

Looking to the future, IBM’s neuromorphic computing chips aim to mimic the brain’s architecture more closely. These chips have shown the potential to be up to 100 times more energy-efficient than traditional processors, paving the way for more sustainable AI systems.

Conclusion

As we’ve explored the intricate world of neurons in machine learning, it’s clear that these digital neurons are more than just lines of code: they’re the sparks of artificial cognition that are reshaping our technological landscape. From the basic principles of neural networks to their diverse applications and ethical implications, neurons in machine learning continue to push the boundaries of what’s possible in AI.

As we stand on the brink of new breakthroughs, from more efficient neuromorphic hardware to more sophisticated learning algorithms, the journey of artificial neurons is far from over. By understanding and harnessing the power of these fundamental units, we’re not just programming machines – we’re nurturing the growth of artificial minds that may one day rival or even surpass our own in capabilities. The future of neurons in machine learning is not just a technological frontier; it’s a new chapter in the story of intelligence itself.

Glossary of Key Terms

- Artificial Neural Network (ANN): A computing system inspired by biological neural networks.

- Deep Learning: A subset of machine learning based on artificial neural networks with multiple layers.

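- Backpropagation: An algorithm for training neural networks that propagates the output error backward through the layers to work out how much each weight contributed to it, so that the weights can be adjusted to reduce the error.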

- Activation Function: A function that turns a neuron’s weighted input sum into its output.

- Convolutional Neural Network (CNN): A type of neural network particularly effective for image processing tasks.

- Recurrent Neural Network (RNN): A type of neural network designed to work with sequential data.

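- Neuroplasticity: The ability of biological neural networks in the brain to change and adapt in response to new information. The rough analogue in artificial networks is the adjustment of weights during training.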
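For readers who want to see the backpropagation entry in concrete form, here is a minimal sketch for a network with one hidden layer. The architecture (tanh hidden units, linear output, squared-error loss), the function names, and the random weights are all assumptions for illustration. The final lines compare one backpropagated gradient against a finite-difference estimate as a sanity check.

```python
import numpy as np

def forward(params, x):
    W1, b1, W2, b2 = params
    h = np.tanh(W1 @ x + b1)   # hidden activations
    y_hat = W2 @ h + b2        # linear output
    return h, y_hat

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * np.sum((y_hat - y) ** 2)

def backprop(params, x, y):
    """Gradients of the loss with respect to every parameter, via the chain rule."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y                # error at the output
    grad_W2 = np.outer(d_out, h)
    grad_b2 = d_out
    d_h = W2.T @ d_out               # error propagated back to the hidden layer
    d_pre = d_h * (1 - h ** 2)       # derivative of tanh(z) is 1 - tanh(z)^2
    grad_W1 = np.outer(d_pre, x)
    grad_b1 = d_pre
    return grad_W1, grad_b1, grad_W2, grad_b2

rng = np.random.default_rng(0)       # random, untrained weights for illustration
params = [rng.normal(size=(3, 2)), rng.normal(size=3),
          rng.normal(size=(1, 3)), rng.normal(size=1)]
x = np.array([0.5, -1.0])
y = np.array([0.25])

analytic = backprop(params, x, y)[0][0, 0]

# Finite-difference estimate of the same gradient entry
eps = 1e-6
W1_plus, W1_minus = params[0].copy(), params[0].copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (loss([W1_plus, *params[1:]], x, y)
           - loss([W1_minus, *params[1:]], x, y)) / (2 * eps)

print(abs(analytic - numeric))
```

Training then consists of repeatedly subtracting a small multiple of these gradients from the parameters.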

FAQs

Q: What’s the difference between traditional machine learning and deep learning?

A: Traditional machine learning often requires manual feature engineering, while deep learning with neural networks can automatically learn relevant features from raw data.

Q: Can neural networks think like humans?

A: While neural networks can perform complex tasks, they don’t “think” in the same way humans do. They excel at pattern recognition and data processing but lack human-like reasoning and consciousness.

Q: How can I start learning about neural networks?

A: Begin with online courses on platforms like Coursera or edX. Practice with libraries like TensorFlow or PyTorch, and work on small projects to gain hands-on experience.

Q: Are neural networks only used in computer science?

A: No, neural networks have applications in various fields including medicine, finance, environmental science, and more.

Q: What are the limitations of current neural network technology?

A: Current limitations include the need for large amounts of training data, difficulty in explaining decision-making processes (the “black box” problem), and high computational requirements for complex models.